A common data analysis problem that I get is taking data from PDF documents and converting it to CSV.

Now this can be easy if you are dealing with one or two PDF, but for my case I had about a dozen or so.

(Quite painful!)

Now when something that I would have to do manually more than 5 times, I would try to automate it.

In this blog post, I will show how to extract PDF data and convert it to CSV using Python. We will be using the PdfDodo API to do so.

Pdfdodo provides you the ability to extract data from PDF using custom AI and OCR algorithms.

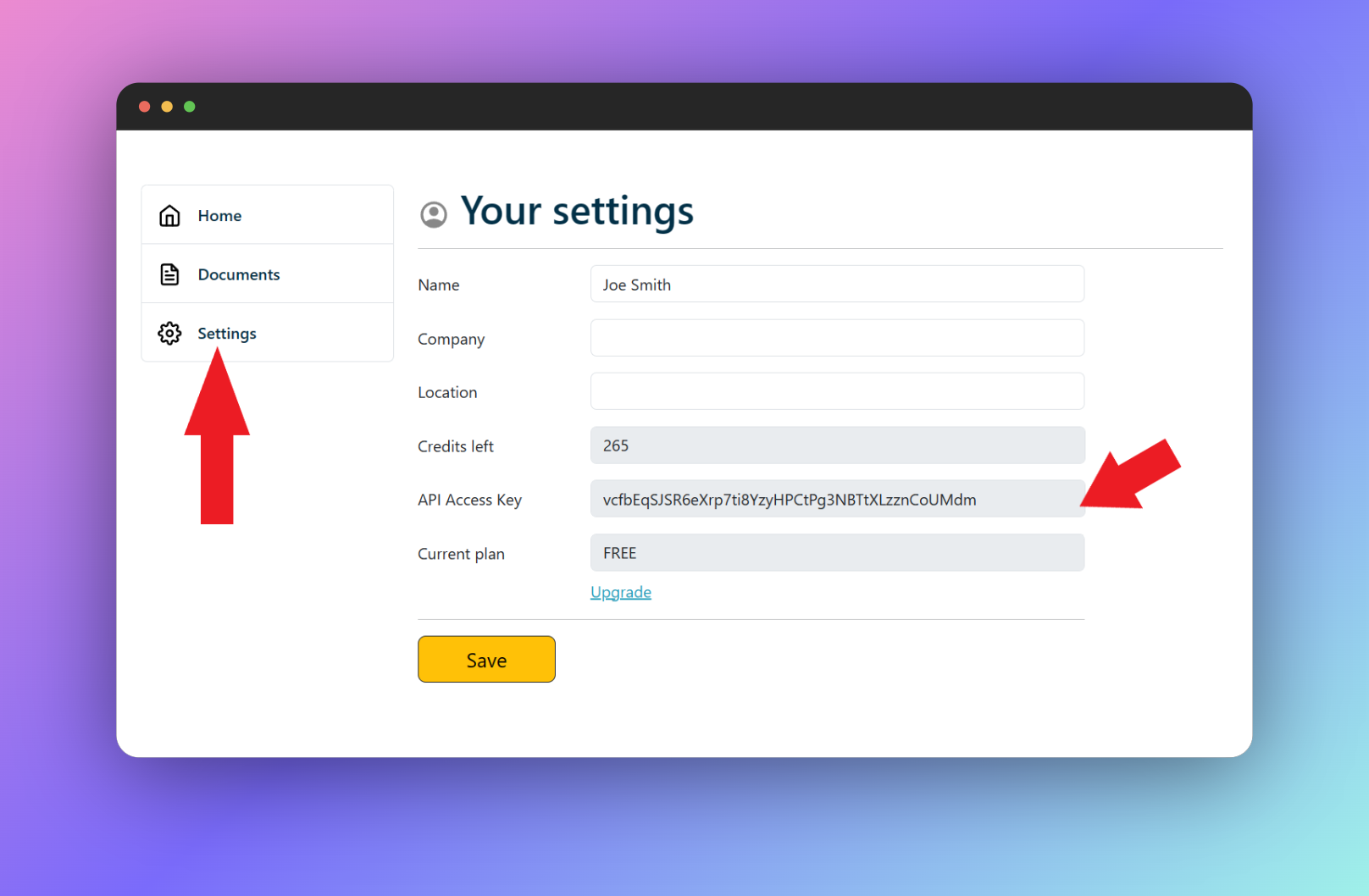

PdfDodo uses a API keys to allow you to access the API. You can get your API key by going to the “Settings” section.

After retrieving your API key, you can pass the token to the API in the HTTP Authorization Header using X-API-KEY .

X-API-KEY:

The next step after we have successfully grabbed our API key, we can upload our document and then call the analyze endpoint to get the data.

Make sure to add X-API-KEY in the Authorization Header.

To upload our PDF file, we can do this in Python as follows.

pip install requestsAfter we have installed the requests library, use the following code to call the Upload file endpoint.

import requests url = "https://app.pdfdodo.com/api/uploadfile" headers = < "Content-Type": "multipart/form-data", "x-api-key": "YOUR_API_KEY" > files = < 'file': ('file.pdf', open('/path/to/your/file.pdf', 'rb')) > response = requests.post(url, headers=headers, files=files)The response will be a JSON result. For example:

< "documentId": "123e4567-e89b-12d3-a456-426614174000", "documentName": "your-test-file.pdf" >Keep note of the documentId .

The last step is to do a GET request to the analyze endpoint, /api/analyze , to get the document data.

For example, lets say our document id is 123e4567-e89b-12d3-a456-426614174000 , we can do the following Python code to analyze and get the document data:

import requests # API endpoint url = "https://app.pdfdodo.com/api/analyze?documentid=123e4567-e89b-12d3-a456-426614174000" # Headers headers = < "Content-Type": "application/json", "x-api-key": "YOUR_API_KEY_HERE" > # Making the GET request response = requests.get(url, headers=headers) # Printing the response print(response.text)This will give you a JSON response of the data in your PDF:

< "tables": [ < "title": "The title of your table", "tableId": "a0f229f6-ade7-4637-bbff-e31fbbd64ec5", "pageNumber": 1, "rows": [ [ "0", "Money In ", "$1,880.00 " ], [ "1", "Money Out ", "$150.25 " ], [ "2", "You Received ", "$1,729.75 " ] ], "left": 0.6953286, "top": 0.2655254, "width": 0.2580709, "height": 0.11655326, "mergedTables": [] > ] >After you have the response, the next task is to save it as CSV.

We will need the json module and csv module.

An example of the code is below.

import json import csv # Your JSON response from the API call # . # . # . # Parse the JSON string data = json.loads(response.text) # Extract rows from table 1 rows = data["tables"][0]["rows"] # Write to CSV with open("output.csv", "w", newline="") as csvfile: writer = csv.writer(csvfile) writer.writerows(rows)The above code takes the rows from table 1 ( data["tables"][0]["rows"] ) and then use the csv library to write out the rows.

After executing the above code, you will get an output.csv file in the same directory as the script.

If you do not want to use a web API and prefer open source, the best library would be Tabula.

Now since we are in Python we need to use tabula-py . Since Tabula was written in Java, tabula-py is just wrapper.

tabula-py is a simple Python wrapper of tabula-java, which can read table of PDF. You can read tables from PDF and convert them into pandas’ DataFrame. tabula-py also converts a PDF file into CSV/TSV/JSON file.`

https://pypi.org/project/tabula-py/

To get started, we need to install the Python tabula-py library with the following command:

pip install tabula-pyNow we also need to make sure that Java is installed. Additionally check that you can use java command on your terminal (eg setting the PATH ).

import tabula # Read pdf into list of DataFrame dfs = tabula.read_pdf("test.pdf", pages='all') # Read remote pdf into list of DataFrame dfs2 = tabula.read_pdf("") # convert PDF into CSV file tabula.convert_into("test.pdf", "output.csv", output_format="csv", pages='all') # convert all PDFs in a directory tabula.convert_into_by_batch("input_directory", output_format='csv', pages='all')I found the results of Tabula to be ok. The biggest issue is that it does not work with scanned PDFs.

There are some issues I found:

PdfPlumber is another open source library that you can convert PDF to CSV. Unlike Tabula where the csv export function is built-in, we need to import the csv module.

To get started, install with pip

pip install pdfplumberWe then can use the following code:

import pdfplumber import csv def extract_tables_from_pdf_to_csv(pdf_path, csv_path): # Open the PDF file with pdfplumber.open(pdf_path) as pdf: # This will accumulate all table rows from all pages all_rows = [] # Iterate over all the pages in the PDF for page in pdf.pages: # Extract tables from the current page tables = page.extract_tables() # Append rows from each table to all_rows for table in tables: all_rows.extend(table) # Write the accumulated rows to a CSV file with open(csv_path, 'w', newline='') as csv_file: writer = csv.writer(csv_file) writer.writerows(all_rows) # Usage pdf_file_path = 'path_to_input.pdf' csv_file_path = 'path_to_output.csv' extract_tables_from_pdf_to_csv(pdf_file_path, csv_file_path)I found this to be a bit better than Tabula, but not by a big margin. Still it does not work with scanned PDFs.

Additionally, there are more dependencies we have to manage. Pdfplumber internally uses pdf2image, which requires poppler-utils. Ensure you have it installed in your system.

I found PdfPlumber does not do a great job in the following scenarios:

Overall, if you want to automate PDF to CSV conversion, then use the PDFDodo API with Python using the request and csv modules.

It handles scanned and text PDFs and the results are pretty accurate.

As an alternative, we can use open source libraries like tabula-py or pdfplumber. The problem is that they require a bit of setup time and tweeking to get good results. Also they do not work with scanned PDFs!

G'day! I am Ken Ito a software engineer based in Australia. I have worked as a software engineer for more than 10 years ranging from roles such as tech lead, data analyst to individual contributor. I have experience in creating code (python, javascript) to extract data from PDFs for ETL and financial statement analysis for accounting purposes.

During those years, I have worked for Government departments, companies like NTT (Nippon Telegraph and Telephone), Bupa and not-for-profit organizations.

Follow along on Twitter and LinkedIn